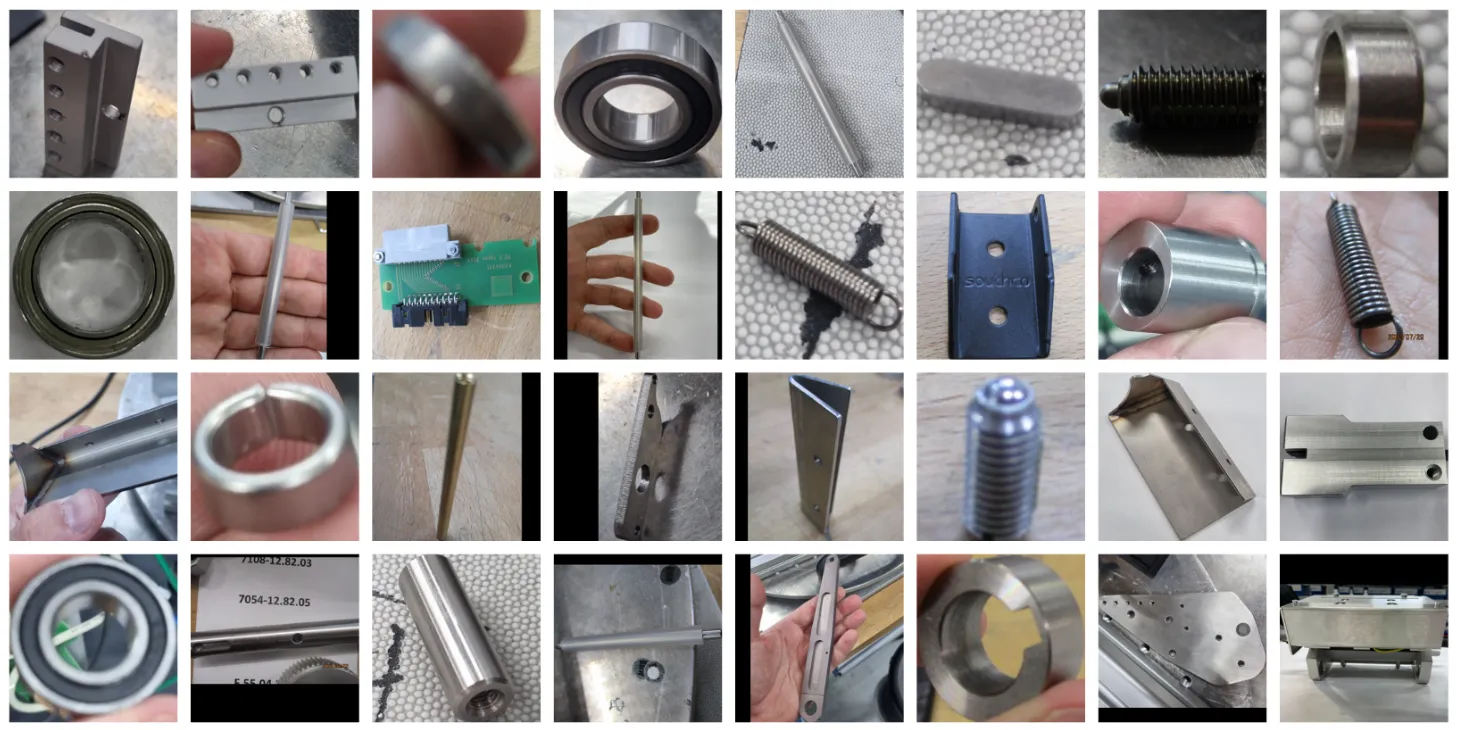

The goal of the SynthNet project was to develop generative neural approaches for creating the synthetic image data needed to build and optimize a visual search index. We generated all images from real-world, industry-grade 3D CAD data (SolidWorks) and used them for object identification. For each component, the SynthNet Render Pipeline automatically generates a set of views with depth data (RGB+D) under different lighting and material conditions, respecting the object's metadata. We also used neural image augmentation to add backgrounds or distractors, and applied style transfer so that our synthetic data mimics real photos. As a result, the Topex-Printer Dataset↗ has been publicly released. It is the first CAD-to-real multi-domain industrial image dataset and comprises 102 machine parts.

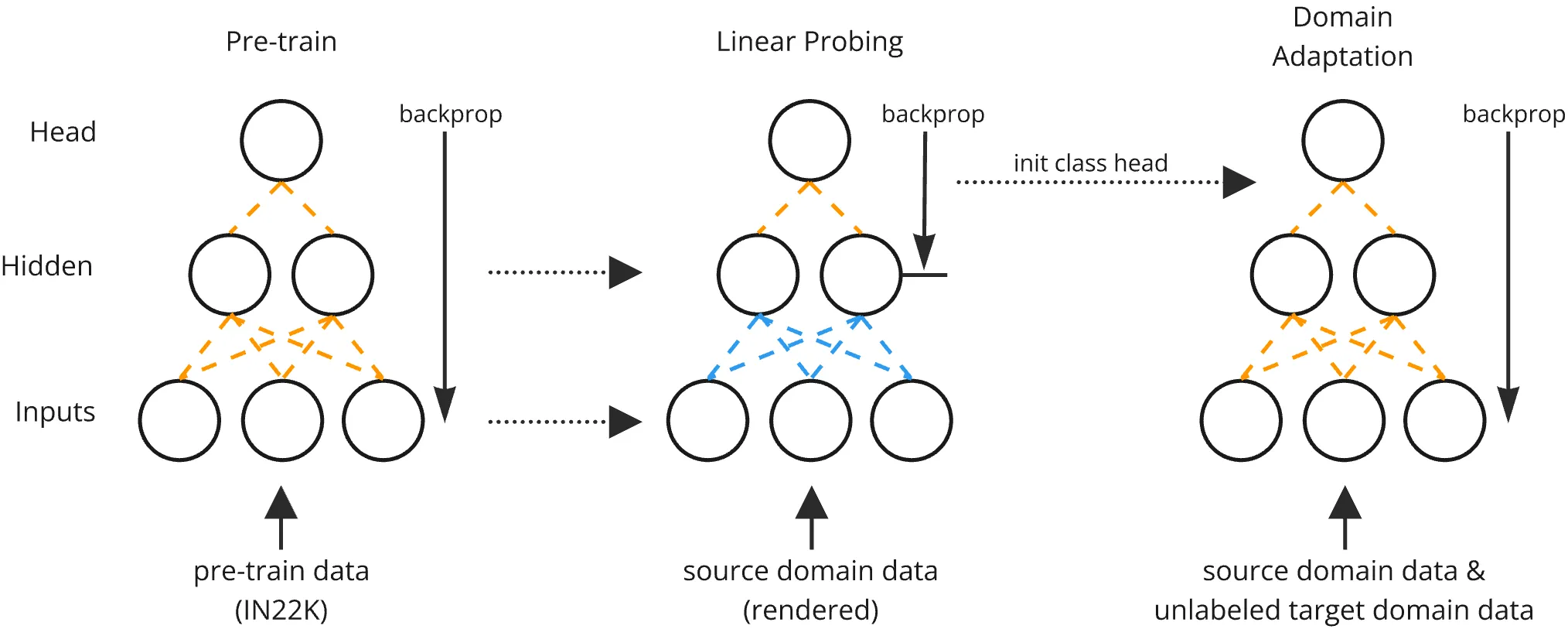

We used the Topex-Printer Dataset to develop a practical approach to Transfer Learning and Unsupervised Domain Adaptation (UDA) in industrial object classification. More precisely, the classifier for real photos is trained only on labeled synthetic images, without any labeled photos. For this challenge I developed the SynthNet Transfer Learning Framework, which supports rapid, reproducible training configurations covering models, hyperparameters, dataloaders, domain transfer techniques, feature fusion techniques, gradual unfreezing, and more. As a result, we developed a two-stage Transfer Learning UDA method that achieves state-of-the-art results on the VisDA-2017 benchmark dataset (93.47%). Our findings were presented at ECML PKDD 2023 in Turin and published by Springer Nature. Check out the paper website↗.

Finally, our trained models served as feature extractors in the SynthNet Retrieval Pipeline: we built a visual search index from our synthetic data using Facebook AI Similarity Search (FAISS) and queried it with real photos to measure retrieval performance (Acc, mAP, NDCG, MRR).
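The retrieval evaluation can be illustrated with a small, self-contained sketch. It is not the SynthNet Retrieval Pipeline: `top_k` is a hypothetical brute-force inner-product search standing in for a FAISS index, the feature vectors and labels are made up, and the metrics use common textbook definitions (binary relevance, average precision normalized by the hits inside the retrieved list) that may differ from the ones used in the project.

```python
import math


def top_k(index, query, k):
    """Brute-force inner-product search (hypothetical stand-in for a FAISS index).

    index: list of (label, feature_vector) pairs, e.g. from synthetic images.
    query: feature vector of a real photo.
    Returns the labels of the k highest-scoring index entries, best first.
    """
    def score(item):
        return sum(a * b for a, b in zip(item[1], query))

    ranked = sorted(index, key=score, reverse=True)
    return [label for label, _ in ranked[:k]]


def reciprocal_rank(ranked_labels, query_label):
    """1 / rank of the first correct result, or 0.0 if there is none."""
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            return 1.0 / rank
    return 0.0


def average_precision(ranked_labels, query_label):
    """Mean of precision@rank over the ranks that hold a correct result."""
    hits, precision_sum = 0, 0.0
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def ndcg(ranked_labels, query_label):
    """Normalized discounted cumulative gain with binary relevance."""
    gains = [1.0 if label == query_label else 0.0 for label in ranked_labels]
    dcg = sum(g / math.log2(r + 1) for r, g in enumerate(gains, start=1))
    ideal = sorted(gains, reverse=True)
    idcg = sum(g / math.log2(r + 1) for r, g in enumerate(ideal, start=1))
    return dcg / idcg if idcg else 0.0


if __name__ == "__main__":
    # Toy 2-D features; real features would come from the trained model.
    index = [("gear", [1.0, 0.0]), ("gear", [0.9, 0.1]),
             ("screw", [0.0, 1.0]), ("belt", [0.7, 0.7])]
    ranked = top_k(index, [0.6, 0.8], k=4)
    print(ranked)
    print(reciprocal_rank(ranked, "screw"),
          average_precision(ranked, "screw"),
          ndcg(ranked, "screw"))
```

Averaging `reciprocal_rank` and `average_precision` over all query photos gives MRR and mAP, and top-1 accuracy is the fraction of queries whose first result carries the correct label.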

1. Initialize a pre-trained model.
2. Train the classification head on source domain data.
3. Train all layers on source domain data.
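The three steps above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the SynthNet Transfer Learning Framework: `set_trainable`, `train_stage`, and `two_stage_training` are hypothetical helper names, the tiny `backbone` is a stand-in for a real pre-trained network, the data is random, and the optimizer and learning rates are arbitrary choices.

```python
import torch
from torch import nn


def set_trainable(module, flag):
    """Freeze (flag=False) or unfreeze (flag=True) all parameters of a module."""
    for param in module.parameters():
        param.requires_grad = flag


def train_stage(model, batches, lr, epochs=1):
    """Run plain SGD over the batches, updating only trainable parameters."""
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    model.train()
    for _ in range(epochs):
        for inputs, labels in batches:
            optimizer.zero_grad()
            loss = loss_fn(model(inputs), labels)
            loss.backward()
            optimizer.step()


def two_stage_training(backbone, head, batches, head_lr=1e-2, full_lr=1e-3):
    """Train the head with a frozen backbone, then train all layers."""
    model = nn.Sequential(backbone, head)
    # Step 2: only the classification head learns from source domain data.
    set_trainable(backbone, False)
    train_stage(model, batches, lr=head_lr)
    # Step 3: unfreeze everything and continue on source domain data.
    set_trainable(backbone, True)
    train_stage(model, batches, lr=full_lr)
    return model


if __name__ == "__main__":
    torch.manual_seed(0)
    # Step 1: stand-in for a pre-trained backbone (a real setup would load
    # pre-trained weights here) plus a fresh 4-class classification head.
    backbone = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 16), nn.ReLU())
    head = nn.Linear(16, 4)
    # Random tensors standing in for labeled synthetic (source domain) images.
    batches = [(torch.randn(8, 3, 8, 8), torch.randint(0, 4, (8,)))
               for _ in range(3)]
    model = two_stage_training(backbone, head, batches)
    print(model(batches[0][0]).shape)
```

Training the head first lets the randomly initialized classifier settle before its gradients reach the pre-trained weights. The lower learning rate in the second stage is a common precaution and an assumption here, not a documented setting.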